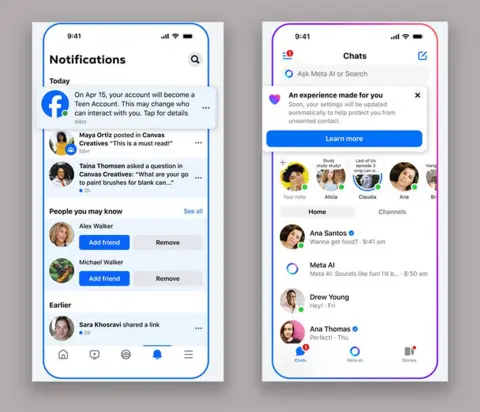

Meta expands Teen Accounts to Facebook and Messenger

Technology correspondent

Getty Images

Meta is expanding Teen Accounts, what it considers its age-appropriate experience for under-18s, to Facebook and Messenger.

The system places younger teenagers on the platforms into more restricted settings by default, with parental permission required to live stream or to turn off image protections for messages.

It was first introduced last September on Instagram, which Meta says “fundamentally changed the experience for teens” on the platform.

But campaigners say it is unclear what difference Teen Accounts have actually made.

“Eight months after Meta rolled out Teen Accounts on Instagram, we’ve had silence from Mark Zuckerberg about whether this has actually been effective and even what sensitive content it actually tackles,” said Andy Burrows, chief executive of the Molly Rose Foundation.

He added that it was “appalling” that parents still did not know whether the settings prevented their children from being recommended inappropriate or harmful content.

Matthew Sowemimo, associate head of policy for child safety online at the NSPCC, said that Meta’s changes “must be combined with proactive measures so dangerous content doesn’t proliferate on Instagram, Facebook and Messenger in the first place.”

But Drew Benvie, chief executive of social media consultancy Battenhall, said it was a step in the right direction.

“For once, big social are fighting for the leadership position not for the most highly engaged teen user base, but for the safest,” he said.

However, he also pointed out that there was a risk, as with all platforms, that teenagers could “find a way around safety settings.”

The expanded rollout of Teen Accounts begins in the United Kingdom, the United States, Australia and Canada from Tuesday.

Companies that provide services popular with children have faced pressure to introduce parental controls or safety mechanisms to safeguard their experiences.

In the United Kingdom, they also face legal requirements to prevent children from encountering harmful and illegal content on their platforms, under the Online Safety Act.

Roblox recently enabled parents to block specific games or experiences on the hugely popular platform as part of its set of controls.

What are Teen Accounts?

How Teen Accounts work depends on the age of the user.

Those aged 16 to 18 will be able to switch off default safety settings, such as having their account set to private.

But children aged 13 to 15 must obtain parental permission to turn off such settings, which can only be done by adding a parent or guardian to their account.

Meta says it has moved at least 54 million teenagers worldwide into Teen Accounts since they were introduced in September.

It says 97% of 13 to 15 year olds have also kept its built-in restrictions.

The system relies on users being truthful about their age when they set up accounts, with Meta using methods such as video selfies to verify their information.

It said in 2024 that it would begin using artificial intelligence (AI) to identify teenagers who might be lying about their age in order to place them back into Teen Accounts.

Findings published by the UK media regulator Ofcom in November 2024 suggested that 22% of eight to 17 year olds lie that they are 18 or over on social media apps.

Some teenagers told the BBC it was still very easy to lie about their age on platforms.

Meta

In the coming months, younger teenagers will also need parental consent to go live on Instagram or to turn off nudity protection, which blurs suspected nude images in direct messages.

Concerns about children and teenagers receiving unwanted nude or sexual images, or feeling pressured to share them in potential scams, have prompted calls for Meta to take tougher action.

Professor Sonia Livingstone, director of the Digital Futures for Children centre, said that Meta’s expansion of Teen Accounts may be a welcome step amid “a growing desire from parents and children for age-appropriate social media.”

But she said that questions remained over the company’s overall protection of young people from online harms, “as well as from its own data-driven and highly commercialised practices.”

“Meta must be accountable for its effects on young people whether or not they use a teen account,” she added.

Mr Sowemimo of the NSPCC said it was important that accountability for keeping children safe online, through safety controls, did not fall to parents and children themselves.

“Ultimately, tech companies must be held responsible for protecting children on their platforms and Ofcom needs to hold them to account for their failures.”